vi configmap-dev.yaml

apiVersion: v1
kind: ConfigMap
metadata:
  name: config-dev
  namespace: default
data:
  DB_URL: localhost
  DB_USER: myuser
  DB_PASS: mypass
  DEBUG_INFO: debug

vi deployment-config01.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: configapp
  labels:
    app: configapp
spec:
  replicas: 1
  selector:
    matchLabels:
      app: configapp
  template:
    metadata:
      labels:
        app: configapp
    spec:
      containers:
      - name: testapp
        image: nginx
        ports:
        - containerPort: 8080
        env:
        - name: DEBUG_LEVEL # variable name inside the container
          valueFrom:
            configMapKeyRef:
              name: config-dev
              key: DEBUG_INFO
---
apiVersion: v1
kind: Service
metadata:
  labels:
    app: configapp
  name: configapp-svc
  namespace: default
spec:
  type: NodePort
  ports:
  - nodePort: 30800
    port: 8080
    protocol: TCP
    targetPort: 80
  selector:
    app: configapp

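To avoid Docker image conflicts, set my Docker Hub account as the default source for image pulls.

docker login

kubectl patch -n default serviceaccount/default -p '{"imagePullSecrets":[{"name": "hyun141"}]}'

This makes Kubernetes use the Docker account credentials when it pulls images.

At this point the secret is reported as not found, because hyun141 has not been created yet. Create it:

kubectl create secret generic hyun141 --from-file=.dockerconfigjson=/root/.docker/config.json --type=kubernetes.io/dockerconfigjson

Check the result with:

kubectl describe serviceaccount default -n default

Private registry image => change it to the plain public image.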

[root@master configmap]# kubectl exec -it configapp-c9bb7b748-lxpgq -- bash

root@configapp-c9bb7b748-lxpgq:/# env

The DEBUG_LEVEL environment variable defined earlier appears in the output.
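To make the renaming explicit, here is a minimal Python sketch of how a configMapKeyRef entry turns a ConfigMap key into a container variable. The function `resolve_env` and the dictionaries are hypothetical stand-ins for what the kubelet does, not a Kubernetes API.

```python
# Hypothetical in-memory copy of the data section of configmap-dev.yaml.
config_dev = {
    "DB_URL": "localhost",
    "DB_USER": "myuser",
    "DB_PASS": "mypass",
    "DEBUG_INFO": "debug",
}


def resolve_env(env_spec, configmaps):
    """Build a container environment from env entries that use configMapKeyRef."""
    env = {}
    for entry in env_spec:
        ref = entry["valueFrom"]["configMapKeyRef"]
        # The variable takes the entry's name, not the ConfigMap key's name.
        env[entry["name"]] = configmaps[ref["name"]][ref["key"]]
    return env


env_spec = [
    {
        "name": "DEBUG_LEVEL",
        "valueFrom": {"configMapKeyRef": {"name": "config-dev", "key": "DEBUG_INFO"}},
    },
]

container_env = resolve_env(env_spec, {"config-dev": config_dev})
print(container_env)
```

Only the referenced key is injected, so DB_URL, DB_USER and DB_PASS do not show up in the container.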

kubectl delete pod,deploy,svc --all

vi configmap-wordpress.yaml

apiVersion: v1
kind: ConfigMap
metadata:
  name: config-wordpress
  namespace: default
data:
  MYSQL_ROOT_HOST: '%'
  MYSQL_ROOT_PASSWORD: kosa04041
  MYSQL_DATABASE: wordpress
  MYSQL_USER: wpuser
  MYSQL_PASSWORD: wppass

vi mysql-pod-svc.yaml

apiVersion: v1
kind: Pod
metadata:
  name: mysql-pod
  labels:
    app: mysql-pod
spec:
  containers:
  - name: mysql-container
    image: mysql:5.7
    envFrom: # load every key of the ConfigMap at once
    - configMapRef:
        name: config-wordpress # only the ConfigMap name is needed
    ports:
    - containerPort: 3306
---
apiVersion: v1
kind: Service
metadata:
  name: mysql-svc
spec:
  type: ClusterIP
  selector:
    app: mysql-pod
  ports:
  - protocol: TCP
    port: 3306
    targetPort: 3306
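In contrast to a single configMapKeyRef, envFrom copies every key of the ConfigMap into the container under its original name. The sketch below models that in plain Python; `env_from` is a hypothetical helper for illustration, not part of any Kubernetes client.

```python
# Hypothetical in-memory copy of the data section of configmap-wordpress.yaml.
config_wordpress = {
    "MYSQL_ROOT_HOST": "%",
    "MYSQL_ROOT_PASSWORD": "kosa04041",
    "MYSQL_DATABASE": "wordpress",
    "MYSQL_USER": "wpuser",
    "MYSQL_PASSWORD": "wppass",
}


def env_from(configmap_names, configmaps):
    """Merge all keys of the listed ConfigMaps into one environment, keeping key names."""
    env = {}
    for name in configmap_names:
        env.update(configmaps[name])
    return env


mysql_env = env_from(["config-wordpress"], {"config-wordpress": config_wordpress})
print(sorted(mysql_env))
```

This is why the mysql container needs no per-variable entries: the key names already match the variables the mysql image expects.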

vi wordpress-pod-svc.yaml

apiVersion: v1
kind: Pod
metadata:
  name: wordpress-pod
  labels:
    app: wordpress-pod
spec:
  containers:
  - name: wordpress-container
    image: wordpress
    env:
    - name: WORDPRESS_DB_HOST # variable
      value: mysql-svc:3306
    - name: WORDPRESS_DB_USER
      valueFrom:
        configMapKeyRef:
          name: config-wordpress
          key: MYSQL_USER
    - name: WORDPRESS_DB_PASSWORD
      valueFrom:
        configMapKeyRef:
          name: config-wordpress
          key: MYSQL_PASSWORD
    - name: WORDPRESS_DB_NAME
      valueFrom:
        configMapKeyRef:
          name: config-wordpress
          key: MYSQL_DATABASE
    ports:
    - containerPort: 80
---
apiVersion: v1
kind: Service
metadata:
  name: wordpress-svc
spec:
  type: LoadBalancer
  # externalIPs:
  # - 192.168.56.105
  selector:
    app: wordpress-pod
  ports:
  - protocol: TCP
    port: 80
    targetPort: 80

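Prefixing a command with watch gives a monitoring view: the output refreshes and the current time is shown at the side.

Entering the external IP should open WordPress, but it did not work for me and I am not sure why.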

vi mysql-deploy-svc.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: mysql-deploy
  labels:
    app: mysql-deploy
spec:
  replicas: 1
  selector:
    matchLabels:
      app: mysql-deploy
  template:
    metadata:
      labels:
        app: mysql-deploy
    spec:
      containers:
      - name: mysql-container
        image: mysql:5.7
        envFrom:
        - configMapRef:
            name: config-wordpress
        ports:
        - containerPort: 3306
---
apiVersion: v1
kind: Service
metadata:
  name: mysql-svc
spec:
  type: ClusterIP
  selector:
    app: mysql-deploy
  ports:
  - protocol: TCP
    port: 3306
    targetPort: 3306

To avoid confusion, delete mysql-pod and wordpress-pod.

vi wordpress-deploy-svc.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: wordpress-deploy
  labels:
    app: wordpress-deploy
spec:
  replicas: 1
  selector:
    matchLabels:
      app: wordpress-deploy
  template:
    metadata:
      labels:
        app: wordpress-deploy
    spec:
      containers:
      - name: wordpress-container
        image: wordpress
        env:
        - name: WORDPRESS_DB_HOST
          value: mysql-svc:3306
        - name: WORDPRESS_DB_USER
          valueFrom:
            configMapKeyRef:
              name: config-wordpress
              key: MYSQL_USER
        - name: WORDPRESS_DB_PASSWORD
          valueFrom:
            configMapKeyRef:
              name: config-wordpress
              key: MYSQL_PASSWORD
        - name: WORDPRESS_DB_NAME
          valueFrom:
            configMapKeyRef:
              name: config-wordpress
              key: MYSQL_DATABASE
        ports:
        - containerPort: 80
---
apiVersion: v1
kind: Service
metadata:
  name: wordpress-svc
spec:
  type: LoadBalancer
  # externalIPs:
  # - 192.168.2.0
  selector:
    app: wordpress-deploy
  ports:
  - protocol: TCP
    port: 80
    targetPort: 80

namespace

A namespace divides a single Kubernetes cluster into several logical units.

It plays the role of a workspace.

* An external IP does not need to be specified, because MetalLB already assigns one.

[root@master ~]# kubectl run nginx-pod1 --image=nginx -n test-namespace

pod/nginx-pod1 created

[root@master ~]# kubectl get po -n test-namspac

No resources found in test-namspac namespace.

[root@master ~]# kubectl get po -n test-namspace

No resources found in test-namspace namespace.

[root@master ~]# kubectl get po -n test-namespace

NAME READY STATUS RESTARTS AGE

nginx-pod1 1/1 Running 0 29s
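The empty results above come from the misspelled namespace names: a pod is looked up by namespace and name together, so a query against a namespace that holds nothing simply returns no resources. A small Python sketch of that lookup, with a hypothetical `get_pods` helper and a dictionary standing in for the cluster state:

```python
# Hypothetical cluster state: pods are keyed by (namespace, name).
cluster = {("test-namespace", "nginx-pod1"): "Running"}


def get_pods(cluster, namespace=""):
    """Return pod names in one namespace; an empty namespace falls back to 'default'."""
    ns = namespace or "default"
    return sorted(name for (pod_ns, name) in cluster if pod_ns == ns)


print(get_pods(cluster, "test-namspac"))    # misspelled: nothing found
print(get_pods(cluster, "test-namespace"))  # correct name: the pod is listed
print(get_pods(cluster))                    # no namespace given: looks in default
```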

[root@master ~]# kubectl config set-context test-namepsace

Context "test-namepsace" created.

[root@master ~]# kubectl delete context test-namepsace

error: the server doesn't have a resource type "context"

[root@master ~]# kubectl delete get-context test-namepsace

error: the server doesn't have a resource type "get-context"

[root@master ~]# kubectl delete test-namepsace

error: the server doesn't have a resource type "test-namepsace"

[root@master ~]# kubectl delete context test-namepsace

error: the server doesn't have a resource type "context"

Contexts are stored in the local kubeconfig file rather than on the API server, which is why kubectl delete cannot find them. A context is removed with kubectl config delete-context test-namepsace.

[root@master ~]# kubectl config set-context test-namespace

Context "test-namespace" created.

[root@master ~]# kubectl config set-context kubernetes-admin@kubernetes --namespace=test-namespace

Context "kubernetes-admin@kubernetes" modified.

[root@master ~]# kubectl config get-contexts kubernetes-admin@kubernetes

CURRENT NAME CLUSTER AUTHINFO NAMESPACE

* kubernetes-admin@kubernetes kubernetes kubernetes-admin test-namespace

[root@master ~]# kubectl expose pod nginx-pod1 --name loadbalancer --type=LoadBalancer --external-ip 192.168.0.50 --port=80

service/loadbalancer exposed

[root@master ~]# kubectl get all

NAME READY STATUS RESTARTS AGE

pod/nginx-pod1 1/1 Running 0 6m7s

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/loadbalancer LoadBalancer 10.100.160.206 192.168.0.50,192.168.0.50 80:30155/TCP 14s

[root@master ~]# kubectl delete svc loadbalancer

service "loadbalancer" deleted

[root@master ~]# kubectl get all

NAME READY STATUS RESTARTS AGE

pod/nginx-pod1 1/1 Running 0 6m52s

[root@master ~]# kubectl expose pod nginx-pod1 --name loadbalancer --type=LoadBalancer --port=80

service/loadbalancer exposed

[root@master ~]# curl 192.168.0.60

^C

[root@master ~]# curl 192.168.0.50

<html>

<head><title>503 Service Temporarily Unavailable</title></head>

<body>

<center><h1>503 Service Temporarily Unavailable</h1></center>

<hr><center>nginx/1.17.8</center>

</body>

</html>

[root@master ~]# kubectl delete namespaces test-namespace

namespace "test-namespace" deleted

[root@master ~]# kubectl config set-context kubernetes-admin@kubernetes --namespace=

Context "kubernetes-admin@kubernetes" modified.

Leaving the namespace empty makes the context fall back to default.

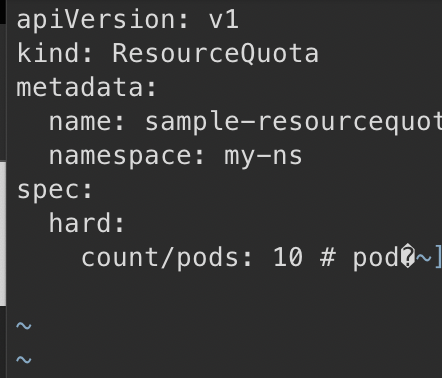

Create a resourcequota directory, change into it, and then:

vi sample-resourcequota.yaml

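apiVersion: v1
kind: ResourceQuota
metadata:
  name: sample-resourcequota
  namespace: my-ns
spec:
  hard:
    count/pods: 5 # maximum number of pods

[root@master ~]# kubectl apply -f sample-resourcequota.yaml

From the sixth pod onward, creation hits the limit.

[root@master resourcequota]# kubectl edit resourcequotas sample-resourcequota -n my-ns

The quota can also be changed this way.

After raising it to 10, creating the sixth pod again succeeds.

If the quota is lowered while the namespace is already full, usage ends up above the limit.

The change itself is still accepted.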
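The behaviour noted above can be sketched as a simple admission check: the quota is only consulted when a new pod is created, so lowering it never removes pods that already exist. `PodQuota` is a hypothetical class written for illustration, not a Kubernetes API.

```python
class PodQuota:
    """Toy model of a count/pods quota for one namespace."""

    def __init__(self, hard):
        self.hard = hard
        self.pods = []

    def create_pod(self, name):
        # Admission check: reject only if the new pod would exceed the hard limit.
        if len(self.pods) + 1 > self.hard:
            return False
        self.pods.append(name)
        return True


quota = PodQuota(hard=5)
results = [quota.create_pod(f"pod-{i}") for i in range(1, 7)]
print(results)  # the sixth creation is rejected

quota.hard = 10  # like editing the ResourceQuota
sixth_after_raise = quota.create_pod("pod-6")

quota.hard = 3  # lowering below current usage is allowed
pods_kept = len(quota.pods)  # existing pods stay
new_after_lower = quota.create_pod("pod-7")
```

After the last step usage reads 6/3: nothing is deleted, but no further pod is admitted until usage drops below the limit.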

[root@master resourcequota]# vi sample-resourcequota-usable.yaml

apiVersion: v1
kind: ResourceQuota
metadata:
  name: sample-resourcequota-usable
spec:
  hard:
    requests.memory: 2Gi # total memory requests allowed in the namespace (not per pod)
    requests.storage: 5Gi
    sample-storageclass.storageclass.storage.k8s.io/requests.storage: 5Gi
    requests.ephemeral-storage: 5Gi
    requests.nvidia.com/gpu: 2
    limits.cpu: 4
    limits.ephemeral-storage: 10Gi # ephemeral storage: deleted together with the container
    limits.nvidia.com/gpu: 4

[root@master resourcequota]# vi sample-resourcequota-usable.yaml

[root@master resourcequota]# kubectl apply -f sample-resourcequota-usable.yaml

resourcequota/sample-resourcequota-usable configured

[root@master resourcequota]# kubectl get resourcequotas

NAME AGE REQUEST LIMIT

sample-resourcequota-usable 78s requests.ephemeral-storage: 0/5Gi, requests.memory: 0/2Gi, requests.nvidia.com/gpu: 0/2, requests.storage: 1Mi/5Gi, sample-storageclass.storageclass.storage.k8s.io/requests.storage: 0/5Gi limits.cpu: 0/4, limits.ephemeral-storage: 0/10Gi, limits.nvidia.com/gpu: 0/4

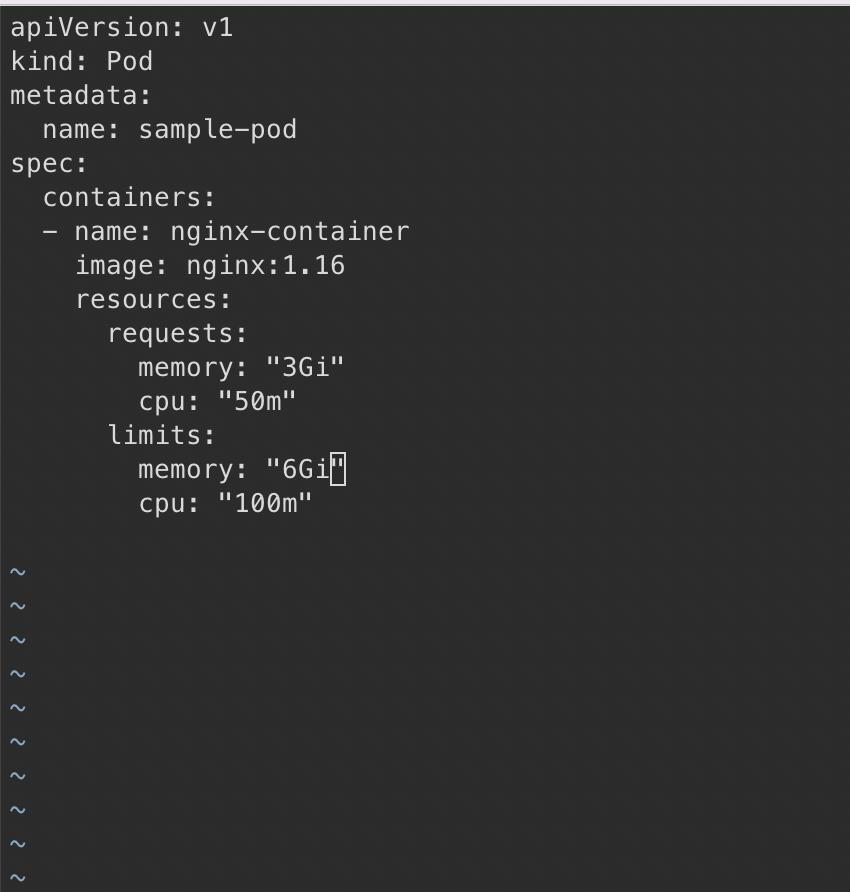

In sample-pod.yaml, add the resources section.

cpu 100m = 100 millicores

1000 millicores = 1 core
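As a worked example of the unit conversion, the following Python sketch turns a CPU quantity string into millicores. `cpu_to_millicores` is a hypothetical helper that covers only the two forms used in these notes (a plain core count and the m suffix).

```python
def cpu_to_millicores(value: str) -> int:
    """Convert a CPU quantity such as '100m', '1' or '0.5' to millicores."""
    if value.endswith("m"):
        return int(value[:-1])
    # A bare number is a core count; 1 core equals 1000 millicores.
    return round(float(value) * 1000)


print(cpu_to_millicores("100m"))
print(cpu_to_millicores("1"))
print(cpu_to_millicores("0.5"))
```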

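Try raising the size from 3G to 6G: this fails with an error, because the resources of an existing pod cannot be edited.

The pod has to be deleted and created again.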

LimitRange

vi sample-limitrange-container.yaml

apiVersion: v1
kind: LimitRange
metadata:
  name: sample-limitrange-container
  namespace: default
spec:
  limits: # up to 2 pods can be created; one more is rejected
  - type: Container # applies to containers
    default: # default limits; if the resources are not available, the container is not created
      memory: 512Mi
      cpu: 500m
    defaultRequest: # default requests when none are set: the minimum guaranteed amount
      memory: 256Mi
      cpu: 250m
    max: # upper bound for explicitly set values
      memory: 1024Mi
      cpu: 1000m
    min:
      memory: 128Mi
      cpu: 125m
    maxLimitRequestRatio: # limit may be at most 2x the request; helps avoid overcommit
      memory: 2
      cpu: 2

vi sample-pod.yaml

apiVersion: v1
kind: Pod
metadata:
  name: sample-pod
spec:
  containers:
  - name: nginx-container
    image: nginx:1.16
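Because sample-pod.yaml sets no resources, the LimitRange defaults are filled in when the pod is admitted. The Python sketch below models only that one case; `apply_defaults` is a hypothetical helper, and containers that set some but not all values follow additional rules that are deliberately not modelled here.

```python
# Defaults copied from sample-limitrange-container.yaml.
LIMIT_RANGE = {
    "default": {"memory": "512Mi", "cpu": "500m"},
    "defaultRequest": {"memory": "256Mi", "cpu": "250m"},
}


def apply_defaults(container, limit_range):
    """Fill in resources for a container that declares none at all."""
    if container.get("resources"):
        # Assumption: partially specified resources are left untouched in this sketch.
        return container
    filled = dict(container)
    filled["resources"] = {
        "limits": dict(limit_range["default"]),
        "requests": dict(limit_range["defaultRequest"]),
    }
    return filled


sample = {"name": "nginx-container", "image": "nginx:1.16"}
admitted = apply_defaults(sample, LIMIT_RANGE)
print(admitted["resources"])
```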

vi sample-pod-overratio.yaml

apiVersion: v1
kind: Pod
metadata:
  name: sample-pod-overratio
spec:
  containers:
  - name: nginx-container
    image: nginx:1.16
    resources:
      requests:
        cpu: 125m
      limits:
        cpu: 500m

This pod is rejected.

Lower the cpu limit to 250m and apply again.
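The rejection follows from the maxLimitRequestRatio of 2 for cpu: 500m / 125m = 4, which is above the allowed ratio, while 250m / 125m = 2 is just within it. A minimal Python check, where `within_ratio` is a hypothetical helper working on millicore values:

```python
def within_ratio(request_m: int, limit_m: int, max_ratio: int) -> bool:
    """True if limit / request does not exceed max_ratio (values in millicores)."""
    return limit_m <= request_m * max_ratio


print(within_ratio(125, 500, 2))  # original manifest: ratio 4
print(within_ratio(125, 250, 2))  # after lowering the limit: ratio 2
```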

Pod scheduling

vi pod-schedule.yaml

apiVersion: v1
kind: Pod
metadata:
  name: pod-schedule-metadata
  labels:
    app: pod-schedule-labels
spec:
  containers:
  - name: pod-schedule-containers
    image: nginx
    ports:
    - containerPort: 80
---
apiVersion: v1
kind: Service
metadata:
  name: pod-schedule-service
spec:
  type: NodePort
  selector:
    app: pod-schedule-labels
  ports:
  - protocol: TCP
    port: 80
    targetPort: 80

After applying it:

# vi pod-nodename.yaml

apiVersion: v1
kind: Pod
metadata:
  name: pod-nodename-metadata
  labels:
    app: pod-nodename-labels
spec:
  containers:
  - name: pod-nodename-containers
    image: nginx
    ports:
    - containerPort: 80
  nodeName: worker2
---
apiVersion: v1
kind: Service
metadata:
  name: pod-nodename-service
spec:
  type: NodePort
  selector:
    app: pod-nodename-labels
  ports:
  - protocol: TCP
    port: 80
    targetPort: 80

The pod was placed on worker2 manually.

[root@master schedule]# kubectl label node worker1 tier=dev

node/worker1 labeled

This gives worker1 the label tier=dev.

The node already had some labels of its own.

The label I added appears at the end of worker1's label list.

vi pod-nodeselector.yaml

apiVersion: v1
kind: Pod
metadata:
  name: pod-nodeselector-metadata
  labels:
    app: pod-nodeselector-labels
spec:
  containers:
  - name: pod-nodeselector-containers
    image: nginx
    ports:
    - containerPort: 80
  nodeSelector:
    tier: dev
---
apiVersion: v1
kind: Service
metadata:
  name: pod-nodeselector-service
spec:
  type: NodePort
  selector:
    app: pod-nodeselector-labels
  ports:
  - protocol: TCP
    port: 80
    targetPort: 80

[root@master schedule]# kubectl get nodes --show-labels

NAME STATUS ROLES AGE VERSION LABELS

master Ready master 23h v1.19.16 beta.kubernetes.io/arch=amd64,beta.kubernetes.io/os=linux,kubernetes.io/arch=amd64,kubernetes.io/hostname=master,kubernetes.io/os=linux,node-role.kubernetes.io/master=

worker1 Ready <none> 23h v1.19.16 beta.kubernetes.io/arch=amd64,beta.kubernetes.io/os=linux,kubernetes.io/arch=amd64,kubernetes.io/hostname=worker1,kubernetes.io/os=linux,tier=dev

worker2 Ready <none> 23h v1.19.16 beta.kubernetes.io/arch=amd64,beta.kubernetes.io/os=linux,kubernetes.io/arch=amd64,kubernetes.io/hostname=worker2,kubernetes.io/os=linux

[root@master schedule]# kubectl apply -f pod-nodeselector.yaml

error: error validating "pod-nodeselector.yaml": error validating data: ValidationError(Pod.spec): unknown field "ports" in io.k8s.api.core.v1.PodSpec; if you choose to ignore these errors, turn validation off with --validate=false

[root@master schedule]# vi pod-nodeselector.yaml

[root@master schedule]# kubectl label nodes worker1 tier-

node/worker1 labeled

[root@master schedule]# kubectl get nodes --show-labels

NAME STATUS ROLES AGE VERSION LABELS

master Ready master 23h v1.19.16 beta.kubernetes.io/arch=amd64,beta.kubernetes.io/os=linux,kubernetes.io/arch=amd64,kubernetes.io/hostname=master,kubernetes.io/os=linux,node-role.kubernetes.io/master=

worker1 Ready <none> 23h v1.19.16 beta.kubernetes.io/arch=amd64,beta.kubernetes.io/os=linux,kubernetes.io/arch=amd64,kubernetes.io/hostname=worker1,kubernetes.io/os=linux

worker2 Ready <none> 23h v1.19.16 beta.kubernetes.io/arch=amd64,beta.kubernetes.io/os=linux,kubernetes.io/arch=amd64,kubernetes.io/hostname=worker2,kubernetes.io/os=linux
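The effect of nodeSelector can be sketched as a label match: a node qualifies only if it carries every key/value pair in the selector. In the Python sketch below, `matching_nodes` is a hypothetical helper and the label sets are shortened versions of the listings above.

```python
def matching_nodes(node_selector, nodes):
    """Return the nodes whose labels contain every pair in node_selector."""
    return [
        name
        for name, labels in nodes.items()
        if all(labels.get(key) == value for key, value in node_selector.items())
    ]


selector = {"tier": "dev"}

# State after: kubectl label node worker1 tier=dev
labelled = {
    "master": {"kubernetes.io/hostname": "master"},
    "worker1": {"kubernetes.io/hostname": "worker1", "tier": "dev"},
    "worker2": {"kubernetes.io/hostname": "worker2"},
}
# State after: kubectl label nodes worker1 tier-
unlabelled = {name: {k: v for k, v in labels.items() if k != "tier"}
              for name, labels in labelled.items()}

print(matching_nodes(selector, labelled))
print(matching_nodes(selector, unlabelled))
```

With the label removed no node matches, so a newly created pod with this nodeSelector would stay Pending until some node is labelled again.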

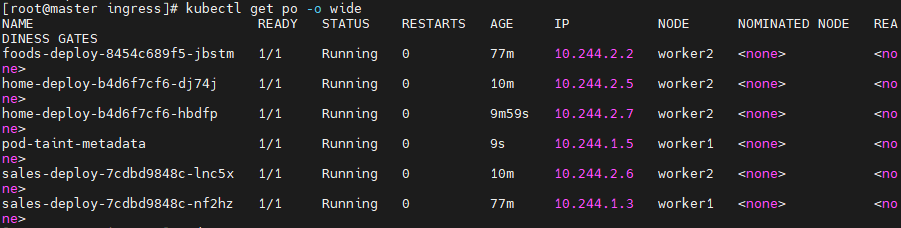

taint

A taint excludes a node from pod placement: the scheduler will not place pods on it automatically.

[root@master schedule]# kubectl taint node worker1 tier=dev:NoSchedule

node/worker1 tainted

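This can be confirmed in the Taints field of the node.

Pods are no longer scheduled onto it automatically.

In ingress-deploy.yaml, increase the number of replicas to 2.

Apply it and check the result.

None of the newly created pods is on worker1.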

vi pod-taint.yaml

apiVersion: v1
kind: Pod
metadata:
  name: pod-taint-metadata
  labels:
    app: pod-taint-labels
spec:
  containers:
  - name: pod-taint-containers
    image: nginx
    ports:
    - containerPort: 80
  tolerations: # allows this pod to be created on the tainted node as well
  - key: "tier" # must match the taint tier=dev:NoSchedule
    operator: "Equal"
    value: "dev"
    effect: "NoSchedule"
---
apiVersion: v1
kind: Service
metadata:
  name: pod-taint-service
spec:
  type: NodePort
  selector:
    app: pod-taint-labels
  ports:
  - protocol: TCP
    port: 80
    targetPort: 80

If this fails, check that the double quotes are plain ASCII quotes rather than curly ones.

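What happens if worker2 is tainted as well?

The pod-taint-metadata pod goes to worker1.

In effect the choice between the two is still more or less random, as before.

With n nodes, tainting only worker1 and worker2 keeps ordinary pods off those two nodes while pods with the matching toleration can still land there. Note that a toleration only permits placement on a tainted node; pinning the pod to those nodes additionally needs nodeSelector or node affinity.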
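The matching rule between taints and tolerations can be sketched in Python as follows. `tolerates` and `schedulable` are hypothetical helpers that model only the NoSchedule effect and the Equal and Exists operators; they are not the scheduler's actual code.

```python
def tolerates(toleration, taint):
    """True if one toleration matches one taint."""
    effect = toleration.get("effect")
    if effect and effect != taint["effect"]:
        return False  # an empty effect would match any effect
    if toleration.get("operator", "Equal") == "Exists":
        # Exists ignores the value; an empty key matches every key.
        return toleration.get("key") in (None, taint["key"])
    return (toleration.get("key") == taint["key"]
            and toleration.get("value") == taint["value"])


def schedulable(pod_tolerations, node_taints):
    """A node is usable if every NoSchedule taint is tolerated by the pod."""
    return all(
        any(tolerates(t, taint) for t in pod_tolerations)
        for taint in node_taints
        if taint["effect"] == "NoSchedule"
    )


worker1_taints = [{"key": "tier", "value": "dev", "effect": "NoSchedule"}]
matching = [{"key": "tier", "operator": "Equal", "value": "dev", "effect": "NoSchedule"}]
mismatched = [{"key": "tiger", "operator": "Equal", "value": "cat", "effect": "NoSchedule"}]

print(schedulable(matching, worker1_taints))
print(schedulable([], worker1_taints))
print(schedulable(mismatched, worker1_taints))
print(schedulable([], []))
```

The last call shows the other half of the picture: an untainted node accepts pods with or without tolerations, which is why a toleration alone does not steer a pod toward the tainted nodes.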

Cluster upgrade

master node

# yum list --showduplicates kubeadm --disableexcludes=kubernetes

# yum install -y kubeadm-1.20.15-0 --disableexcludes=kubernetes

# kubeadm version

# kubeadm upgrade plan

Type in the command shown in that output as is.

# yum install -y kubelet-1.20.15-0 kubectl-1.20.15-0 --disableexcludes=kubernetes

# systemctl daemon-reload

# systemctl restart kubelet

Update kubelet and restart it.

worker node

# yum install -y kubeadm-1.20.15-0 --disableexcludes=kubernetes

# kubeadm upgrade node

Run this on each worker node.

# kubectl drain worker1 --ignore-daemonsets --force

# kubectl drain worker2 --ignore-daemonsets --force

Run this part on the master node.

# yum install -y kubelet-1.20.15-0 kubectl-1.20.15-0 --disableexcludes=kubernetes

# systemctl daemon-reload

# systemctl restart kubelet

# kubectl uncordon worker1

# kubectl uncordon worker2

# kubectl get node

Run this part on the master node.
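The upgrade above moves from v1.19.16 to 1.20.15, that is, one minor version. kubeadm does not support skipping minor versions, so a larger jump has to be done in several rounds. A small Python sketch of that check, where `is_supported_step` is a hypothetical helper and not part of kubeadm:

```python
def parse_minor(version: str):
    """Extract (major, minor) from strings such as 'v1.19.16' or '1.20.15-0'."""
    major, minor = version.lstrip("v").split(".")[:2]
    return int(major), int(minor)


def is_supported_step(current: str, target: str) -> bool:
    """True if target is the same minor version or exactly one minor version ahead."""
    cur_major, cur_minor = parse_minor(current)
    tgt_major, tgt_minor = parse_minor(target)
    return cur_major == tgt_major and tgt_minor - cur_minor in (0, 1)


print(is_supported_step("v1.19.16", "1.20.15-0"))
print(is_supported_step("v1.19.16", "1.21.0"))
```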
